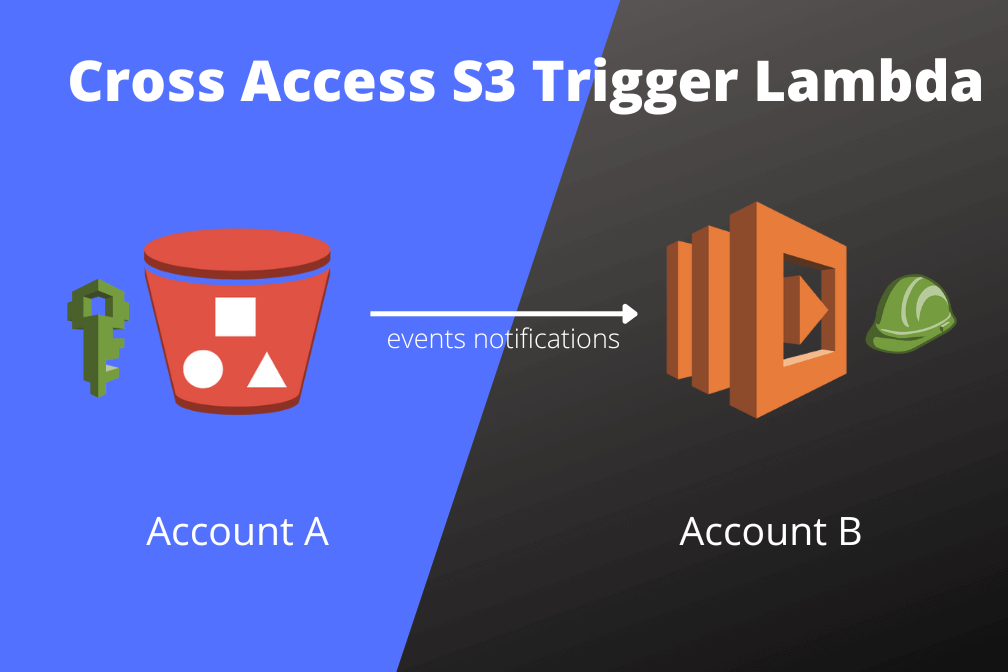

Key Highlights and What you’ll learn

- How to create an S3 bucket in account A

- How to create a Lambda function in account B

- How to set up S3 event notifications that trigger a Lambda function in another AWS account

- The IAM permissions that allow Lambda to read/write objects in an S3 bucket in another AWS account

- The IAM permissions needed to read from a KMS-encrypted S3 bucket

- KMS key limitations

- Dos and don'ts, and best practices!

- Troubleshooting issues

By the end of this tutorial, you'll have an S3 bucket in account A that triggers a Lambda function in account B whenever a new object is created. The Lambda function in account B will also be able to read objects from account A's S3 bucket.


Prerequisites

- Basic knowledge about AWS S3, Lambda and IAM (Identity & Access Management)

- Two AWS accounts (free tier will do!)

Create S3 bucket

On account A: an S3 bucket can be created in two main ways.

Using the S3 console

- Sign in to the AWS Management Console and open the Amazon S3 console at https://console.aws.amazon.com/s3. For this tutorial, we assume the bucket name is maxcotec-s3-events-lambda-test.

- Choose Create bucket. The Create bucket wizard opens.

- In Bucket name, enter a DNS-compliant name for your bucket. Bucket names must follow the AWS bucket naming rules.

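Using the AWS CLI

If you have the AWS CLI installed, you can run the following command from a terminal. Don't forget to replace `<your-bucket-name>`. In any region other than us-east-1, S3 also requires a `LocationConstraint` that matches the region.

```bash
aws s3api create-bucket \
    --bucket <your-bucket-name> \
    --region eu-west-1 \
    --create-bucket-configuration LocationConstraint=eu-west-1
```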
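If you would rather script this step in Python, the following is a minimal sketch built around boto3's S3 `create_bucket` call. The helper names `create_bucket_kwargs` and `create_bucket` are hypothetical, and the sketch assumes you pass in a boto3 S3 client configured with account A's credentials. It encodes the S3 rule that us-east-1 takes no `LocationConstraint` while every other region needs one.

```python
import json


def create_bucket_kwargs(bucket_name: str, region: str) -> dict:
    """Build keyword arguments for boto3's S3 create_bucket call.

    us-east-1 is S3's default location and must not be passed as a
    LocationConstraint; every other region must be passed explicitly.
    """
    kwargs = {"Bucket": bucket_name}
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_bucket(s3_client, bucket_name: str, region: str) -> dict:
    """Create the bucket using an S3 client authenticated against account A."""
    return s3_client.create_bucket(**create_bucket_kwargs(bucket_name, region))


if __name__ == "__main__":
    # Print the arguments for the tutorial's bucket without calling AWS.
    print(json.dumps(create_bucket_kwargs("maxcotec-s3-events-lambda-test", "eu-west-1"), indent=2))
```

With boto3 installed and account A credentials configured, `create_bucket(boto3.client("s3", region_name="eu-west-1"), "maxcotec-s3-events-lambda-test", "eu-west-1")` would create the bucket. Passing the client in keeps the helper testable without AWS.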

Your S3 bucket should now be created. We are not going to set up event notifications yet. Follow along…

Create Lambda function

On account B

- Sign in to the AWS Management Console and open the AWS Lambda console at https://console.aws.amazon.com/lambda.

- Choose Create function. The Create function wizard opens.

- Keep the default option, Author from scratch, selected.

- Under Basic information, set Function name: s3Events, Runtime: Python 3.9, Architecture: x86_64.

- Under Permissions, choose Create a new role from AWS policy templates and set Role name: Lambdas3EventsRole. Select `Amazon S3 object read-only permissions` from the Policy templates drop-down menu.

- Choose Create function.

A new Lambda function is now created with the role Lambdas3EventsRole, which has two policies attached by default: 1. AWSLambdaBasicExecutionRole-*** and 2. AWSLambdaS3ExecutionRole-***. Add the following code to the function and click Deploy.

```python
import json
import urllib.parse

import boto3

print('Loading function')

# Created once per execution environment and reused across invocations.
s3 = boto3.client('s3')


def lambda_handler(event, context):
    print("Received event: " + json.dumps(event, indent=2))

    # Get the object from the event and show its content type.
    # S3 URL-encodes object keys in event payloads, so decode the key first.
    bucket = event['Records'][0]['s3']['bucket']['name']
    key = urllib.parse.unquote_plus(event['Records'][0]['s3']['object']['key'], encoding='utf-8')
    try:
        # Cross-account reads succeed only if both the role policy and the bucket policy allow them.
        response = s3.get_object(Bucket=bucket, Key=key)
        print("CONTENT TYPE: " + response['ContentType'])
        return response['ContentType']
    except Exception as e:
        print(e)
        print('Error getting object {} from bucket {}. Make sure they exist and your bucket is in the same region as this function.'.format(key, bucket))
        raise
```
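The handler above only reads `Records[0]`. As a hedged sketch (the helper `s3_objects_from_event` and the trimmed `SAMPLE_EVENT` are illustrative, not part of the AWS blueprint), the following walks every record and decodes each key the same way, so the parsing logic can be tried locally without AWS credentials:

```python
import urllib.parse


def s3_objects_from_event(event: dict) -> list:
    """Return (bucket, key) pairs for every record in an S3 notification event.

    S3 URL-encodes object keys in the event (a space arrives as "+"),
    so each key is decoded with unquote_plus.
    """
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"], encoding="utf-8")
        objects.append((bucket, key))
    return objects


# A trimmed-down sample event; real notifications carry many more fields.
SAMPLE_EVENT = {
    "Records": [
        {
            "eventSource": "aws:s3",
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "maxcotec-s3-events-lambda-test"},
                "object": {"key": "uploads/my+image.png"},
            },
        }
    ]
}

if __name__ == "__main__":
    print(s3_objects_from_event(SAMPLE_EVENT))
```

Calling `s3_objects_from_event(SAMPLE_EVENT)` returns `[('maxcotec-s3-events-lambda-test', 'uploads/my image.png')]`, showing the `+` decoded back into a space.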

Grant Lambda permission to receive notifications from S3

1. Go to the Configuration tab of the Lambda function. Choose Permissions and, under Resource-based policy, choose Add permissions.

2. Select AWS service, choose S3, and fill in the form. Be sure to set Source account to the actual account ID of account A, where your S3 bucket lives.

3. Choose Save.

You should now see an S3 trigger attached to the Lambda function.

The error message "You do not have permission to access the bucket" (AccessDenied) on the trigger is expected. That is because we haven't granted account B's root user access to account A's S3 bucket. This isn't really needed, because we are going to grant access to the S3 bucket only to this function's role, Lambdas3EventsRole, rather than to the entire account B.

You may be getting confused here, but trust me, this is all going to make sense once completed. So follow along…
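The Add permissions form corresponds to a single `add_permission` call on the Lambda API. A minimal sketch, assuming you run it with account B's credentials; the helper name, the statement ID `AllowS3InvokeFromAccountA`, and the account ID `111111111111` are placeholders:

```python
def s3_invoke_permission(function_name: str, bucket_name: str, source_account: str,
                         statement_id: str = "AllowS3InvokeFromAccountA") -> dict:
    """Keyword arguments for boto3's Lambda add_permission call (run in account B).

    Principal lets the S3 service invoke the function; SourceArn and
    SourceAccount limit that to one bucket owned by account A.
    """
    if not (source_account.isdigit() and len(source_account) == 12):
        raise ValueError("source_account must be a 12-digit AWS account ID")
    return {
        "FunctionName": function_name,
        # Hypothetical ID; it must be unique within the function's policy.
        "StatementId": statement_id,
        "Action": "lambda:InvokeFunction",
        "Principal": "s3.amazonaws.com",
        "SourceArn": f"arn:aws:s3:::{bucket_name}",
        "SourceAccount": source_account,
    }


if __name__ == "__main__":
    # 111111111111 is a placeholder for account A's ID.
    print(s3_invoke_permission("s3Events", "maxcotec-s3-events-lambda-test", "111111111111"))
```

`boto3.client("lambda").add_permission(**s3_invoke_permission("s3Events", "maxcotec-s3-events-lambda-test", "111111111111"))` would add the statement. Setting both `SourceArn` and `SourceAccount` matters here, because S3 bucket ARNs do not contain an account ID.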

Set up S3 event notifications

On Account A

1. Choose your S3 bucket > Properties > Event notifications > Create event notification.

2. Fill in the form. Be sure to enter the ARN of your own Lambda function in account B. Under Event types we selected only Put, which generates an event whenever a new object is created in the bucket with a PUT request (objects created by multipart upload, copy, or POST have their own event types). You can also set a Prefix and Suffix so that S3 generates events only for specific objects (more on this later).

3. Choose Save changes. You should now see a green notification at the top: Successfully created event notification "TriggerLambdaOnAccountB".
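The same notification can be configured through boto3's `put_bucket_notification_configuration`. The sketch below only builds the `NotificationConfiguration` dictionary; the helper name and its optional `prefix`/`suffix` arguments are assumptions for illustration, and the Lambda ARN is a placeholder.

```python
def lambda_notification_config(function_arn: str, config_id: str = "TriggerLambdaOnAccountB",
                               prefix: str = "", suffix: str = "") -> dict:
    """NotificationConfiguration for s3.put_bucket_notification_configuration (account A)."""
    lambda_config = {
        "Id": config_id,
        "LambdaFunctionArn": function_arn,
        # Same choice as the console form: only PUT object creations.
        "Events": ["s3:ObjectCreated:Put"],
    }
    # Optional key filters, equivalent to the Prefix and Suffix fields in the console.
    rules = []
    if prefix:
        rules.append({"Name": "prefix", "Value": prefix})
    if suffix:
        rules.append({"Name": "suffix", "Value": suffix})
    if rules:
        lambda_config["Filter"] = {"Key": {"FilterRules": rules}}
    return {"LambdaFunctionConfigurations": [lambda_config]}


if __name__ == "__main__":
    # Placeholder ARN: region, account B's ID and function name must match yours.
    arn = "arn:aws:lambda:eu-west-1:222222222222:function:s3Events"
    print(lambda_notification_config(arn, suffix=".png"))
```

Run the call from account A, for example `s3.put_bucket_notification_configuration(Bucket="maxcotec-s3-events-lambda-test", NotificationConfiguration=lambda_notification_config(arn))`. Be aware that this call replaces the bucket's whole notification configuration, and S3 validates the destination when saving, so the Lambda permission granted earlier must already exist.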

Test S3 Event Notifications

Let's upload a file to account A's S3 bucket and check that the event notification triggers the Lambda function in account B.

1. Upload a file to the S3 bucket.

2. As soon as the upload completes, the S3 event should trigger the Lambda function in account B. Let's check the Lambda logs in CloudWatch to confirm it did. Go to account B's Lambda function > Monitoring > View logs in CloudWatch. This opens the CloudWatch console in a new tab. Under Logs > Log groups, you should see the logs under Log streams.

NOTE: If you do not see any logs under Log streams, the Lambda function was not triggered!

The logs show that an object with key image.png was uploaded to the S3 bucket.
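Checking the logs can also be scripted. This is a minimal sketch, assuming a boto3 CloudWatch Logs client for account B and the default log group name `/aws/lambda/<function-name>`; the helper names are hypothetical, and only the first page of `filter_log_events` results is read.

```python
def lambda_log_group(function_name: str) -> str:
    """Lambda writes to a log group named /aws/lambda/<function-name> by default."""
    return f"/aws/lambda/{function_name}"


def find_received_events(logs_client, function_name: str, start_time_ms: int) -> list:
    """Return log lines containing the handler's "Received event" message.

    start_time_ms is a Unix timestamp in milliseconds. Only the first page
    of results is read; use a paginator for busy functions.
    """
    response = logs_client.filter_log_events(
        logGroupName=lambda_log_group(function_name),
        startTime=start_time_ms,
        # Double quotes make CloudWatch match the exact phrase.
        filterPattern='"Received event"',
    )
    return [event["message"] for event in response.get("events", [])]
```

An empty list from `find_received_events(boto3.client("logs"), "s3Events", start_ms)` means either the function was not triggered or its logs have not arrived yet.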

Lambda permissions to read/write from a cross-account S3 bucket

The logs above also show an error: An error occurred (AccessDenied) when calling the GetObject operation. That's because our Lambda function does not yet have permission to read from the S3 bucket. To grant it, we have to set policies on both the Lambda function's role and the S3 bucket. AWS splits these into two kinds of policies, explained as follows:

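Identity-based policy

The identity here is the entity that is going to access the resource. Generally, this identity is a user (IAM user), a role (IAM role), or a group (IAM group). In our case, it's the IAM role attached to the Lambda function. While creating the function, we created the role Lambdas3EventsRole and already attached `read-only permissions` to it. Then why do we still see this error? Because the bucket belongs to a different account: for cross-account access, both the identity-based policy in account B and a resource-based policy on the bucket in account A must allow the request.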

NOTE: if your S3 bucket is KMS-encrypted, you have to attach an additional policy to this role that allows the Lambda function to decrypt with the KMS key, e.g.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": "kms:Decrypt",
      "Resource": "<KMS-KEY-ARN>"
    }
  ]
}
```
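For reference, here is a sketch of a scoped identity-based policy for Lambdas3EventsRole that covers both the S3 and the KMS side. The helper name, its `allow_write` flag, and the Sid values are illustrative assumptions; pass the real bucket name and key ARN.

```python
import json


def lambda_role_policy(bucket_name: str, kms_key_arn: str = "", allow_write: bool = False) -> dict:
    """Identity-based policy for the Lambda role in account B.

    Grants object reads (and optionally writes) on account A's bucket and,
    if a KMS key ARN is given, the KMS permissions needed for SSE-KMS objects.
    """
    s3_actions = ["s3:GetObject"]
    kms_actions = ["kms:Decrypt"]
    if allow_write:
        s3_actions.append("s3:PutObject")
        # Writing an SSE-KMS object requires KMS to generate a data key.
        kms_actions.append("kms:GenerateDataKey")
    statements = [{
        "Sid": "CrossAccountBucketObjects",
        "Effect": "Allow",
        "Action": s3_actions,
        "Resource": f"arn:aws:s3:::{bucket_name}/*",
    }]
    if kms_key_arn:
        statements.append({
            "Sid": "BucketKmsKey",
            "Effect": "Allow",
            "Action": kms_actions,
            "Resource": kms_key_arn,
        })
    return {"Version": "2012-10-17", "Statement": statements}


if __name__ == "__main__":
    print(json.dumps(lambda_role_policy("maxcotec-s3-events-lambda-test"), indent=2))
```

It could be attached with `boto3.client("iam").put_role_policy(RoleName="Lambdas3EventsRole", PolicyName="CrossAccountS3Access", PolicyDocument=json.dumps(policy))`, where `CrossAccountS3Access` is a hypothetical policy name.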

Resource-based policy

The resource here is the entity being accessed by an identity. In our case, the S3 bucket is the resource that our Lambda role (the identity) is trying to access. Go to the S3 bucket > Permissions > Bucket policy > Edit. Add the following policy, replacing `<LambdaRoleArn>` and `<s3BucketArn>` with the correct values (a bucket ARN looks like `arn:aws:s3:::maxcotec-s3-events-lambda-test`).

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AccountALambdaReadWrite",
      "Effect": "Allow",
      "Principal": {
        "AWS": "<LambdaRoleArn>"
      },
      "Action": "s3:GetObject",
      "Resource": "<s3BucketArn>/*"
    }
  ]
}
```

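This should now allow the Lambda function to read objects from the S3 bucket. To also let it write objects, add `s3:PutObject` to the `Action` list (for example `"Action": ["s3:GetObject", "s3:PutObject"]`) and allow the same action in the role's identity-based policy.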
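The bucket policy can also be generated and applied from Python. A minimal sketch with hypothetical helper names, assuming a boto3 S3 client for account A; `222222222222` stands in for account B's ID, and the optional `allow_write` flag adds `s3:PutObject`. Roles created from the Lambda console are often placed under the `/service-role/` path, so copying the exact role ARN from IAM is the safest option.

```python
import json


def role_arn(account_id: str, role_name: str, path: str = "/") -> str:
    """Build an IAM role ARN; path is "/" unless the role was created under one."""
    return f"arn:aws:iam::{account_id}:role{path}{role_name}"


def cross_account_bucket_policy(bucket_name: str, lambda_role_arn: str,
                                allow_write: bool = False) -> dict:
    """Bucket policy letting one role from another account read (and optionally write) objects."""
    actions = ["s3:GetObject"]
    if allow_write:
        actions.append("s3:PutObject")
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "AccountBLambdaObjectAccess",
            "Effect": "Allow",
            "Principal": {"AWS": lambda_role_arn},
            "Action": actions,
            # Object-level actions apply to keys, hence the trailing /*.
            "Resource": f"arn:aws:s3:::{bucket_name}/*",
        }],
    }


def attach_bucket_policy(s3_client, bucket_name: str, policy: dict) -> None:
    """Apply the policy with an account A S3 client; this replaces any existing bucket policy."""
    s3_client.put_bucket_policy(Bucket=bucket_name, Policy=json.dumps(policy))


if __name__ == "__main__":
    arn = role_arn("222222222222", "Lambdas3EventsRole", "/service-role/")
    print(json.dumps(cross_account_bucket_policy("maxcotec-s3-events-lambda-test", arn), indent=2))
```

Because `put_bucket_policy` overwrites the whole policy, merge this statement into any existing bucket policy before applying it.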

NOTE: If your S3 bucket is KMS-encrypted, the KMS key is also a resource being accessed by the Lambda identity. So you have to update the KMS key's resource-based policy (its key policy) too.

KMS Key limitation

You cannot amend the key policy of AWS managed KMS keys. So you won't be able to allow a Lambda function in another account to read from the S3 bucket if it's encrypted with an AWS managed KMS key (aws/s3). Lambda is going to log the following error:

An error occurred (AccessDenied) when calling the GetObject operation: The ciphertext refers to a customer master key that does not exist, does not exist in this region, or you are not allowed to access.
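To find out in advance whether you will hit this limitation, you can check which key the bucket's default encryption uses. A sketch, assuming boto3 S3 and KMS clients for account A; the helper names are hypothetical. It inspects only the bucket default: individual objects may have been uploaded with a different key, which `head_object` reports as `SSEKMSKeyId`.

```python
def bucket_default_kms_key(encryption_response: dict):
    """Return the KMS key used by default encryption, or None if it is not SSE-KMS.

    encryption_response is the dict returned by s3.get_bucket_encryption().
    When SSE-KMS is set without a key ID, S3 falls back to the AWS managed
    aws/s3 key, which this sketch reports as "alias/aws/s3".
    """
    rules = encryption_response["ServerSideEncryptionConfiguration"]["Rules"]
    for rule in rules:
        default = rule.get("ApplyServerSideEncryptionByDefault", {})
        if default.get("SSEAlgorithm", "").startswith("aws:kms"):
            return default.get("KMSMasterKeyID", "alias/aws/s3")
    return None


def is_aws_managed_key(kms_client, key_id: str) -> bool:
    """True when KMS reports the key as AWS managed, i.e. its key policy cannot be edited."""
    metadata = kms_client.describe_key(KeyId=key_id)["KeyMetadata"]
    return metadata["KeyManager"] == "AWS"


if __name__ == "__main__":
    sample = {"ServerSideEncryptionConfiguration": {"Rules": [
        {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "aws:kms"}}
    ]}}
    print(bucket_default_kms_key(sample))
```

Typical use: `key = bucket_default_kms_key(s3.get_bucket_encryption(Bucket=bucket))`, then `is_aws_managed_key(kms, key)` if `key` is not `None`. A `True` result means switching the bucket to a customer managed key before granting cross-account access.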

So when you want an identity to access an encrypted resource, always create and use your own KMS key (a customer managed key). In the AWS KMS console, open your newly created customer managed key, go to the Key policy tab, and append the following statement to the policy's Statement array:

```json
{
  "Sid": "Allow use of the key",
  "Effect": "Allow",
  "Principal": {
    "AWS": "<LambdaRoleArn>"
  },
  "Action": [
    "kms:Encrypt",
    "kms:Decrypt",
    "kms:ReEncrypt*",
    "kms:GenerateDataKey*",
    "kms:DescribeKey"
  ],
  "Resource": "*"
}
```
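Editing the key policy by hand is error-prone, so here is a minimal read-modify-write sketch, assuming a boto3 KMS client for the account that owns the key; the helper names are hypothetical. It keeps the existing statements (including the one that lets the key's account administer it) and replaces any earlier statement with the same Sid.

```python
import json


def key_policy_statement(lambda_role_arn: str) -> dict:
    """The "Allow use of the key" statement for a given role ARN."""
    return {
        "Sid": "Allow use of the key",
        "Effect": "Allow",
        "Principal": {"AWS": lambda_role_arn},
        "Action": ["kms:Encrypt", "kms:Decrypt", "kms:ReEncrypt*",
                   "kms:GenerateDataKey*", "kms:DescribeKey"],
        "Resource": "*",
    }


def add_statement_to_key_policy(policy_json: str, statement: dict) -> str:
    """Return the policy JSON with the statement appended, replacing any statement with the same Sid."""
    policy = json.loads(policy_json)
    existing = policy.get("Statement", [])
    if isinstance(existing, dict):  # a policy may hold a single statement object
        existing = [existing]
    kept = [s for s in existing if s.get("Sid") != statement["Sid"]]
    policy["Statement"] = kept + [statement]
    return json.dumps(policy, indent=2)


def grant_role_on_key(kms_client, key_id: str, lambda_role_arn: str) -> None:
    """Fetch the key policy, add the statement, and write it back."""
    current = kms_client.get_key_policy(KeyId=key_id, PolicyName="default")["Policy"]
    updated = add_statement_to_key_policy(current, key_policy_statement(lambda_role_arn))
    kms_client.put_key_policy(KeyId=key_id, PolicyName="default", Policy=updated)
```

Because the sketch never drops the existing statements, running it does not lock the key's own account out of key administration.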

Read more learning articles;

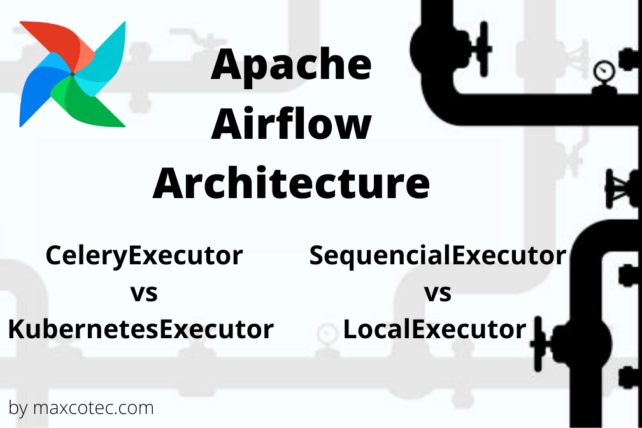

- Apache Airflow Architecture – Executors Comparison

- How to create a DAG in Airflow – Data cleaning pipeline

- How to Install s3fs to access s3 bucket from Docker container